Image generative transformers typically treat an image as a sequence of tokens and decode it sequentially in raster-scan order (i.e., line by line).
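To make the cost of raster-scan decoding concrete, here is a minimal sketch. It assumes a 16×16 token grid and a hypothetical `predict_next` function standing in for the transformer. One forward pass is spent per token, so the number of sequential steps equals the number of tokens.

```python
import random

def raster_scan_decode(height, width, predict_next, seed=0):
    """Decode a height x width grid of token ids one position at a time.

    predict_next(prefix, rng) is a hypothetical stand-in for an autoregressive
    transformer that returns the next token id given all previous tokens.
    """
    rng = random.Random(seed)
    tokens, order = [], []
    for row in range(height):        # top to bottom
        for col in range(width):     # left to right within each row
            tokens.append(predict_next(tokens, rng))  # one forward pass per token
            order.append((row, col))
    grid = [tokens[r * width:(r + 1) * width] for r in range(height)]
    return grid, order, len(order)

def dummy_predict_next(prefix, rng, vocab_size=1024):
    # Placeholder model: ignores the prefix and samples a token id uniformly.
    return rng.randrange(vocab_size)

grid, order, steps = raster_scan_decode(16, 16, dummy_predict_next)
print(steps)  # 256
```

The sequential loop is the bottleneck the rest of this section addresses: a 256-token image needs 256 dependent model calls.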

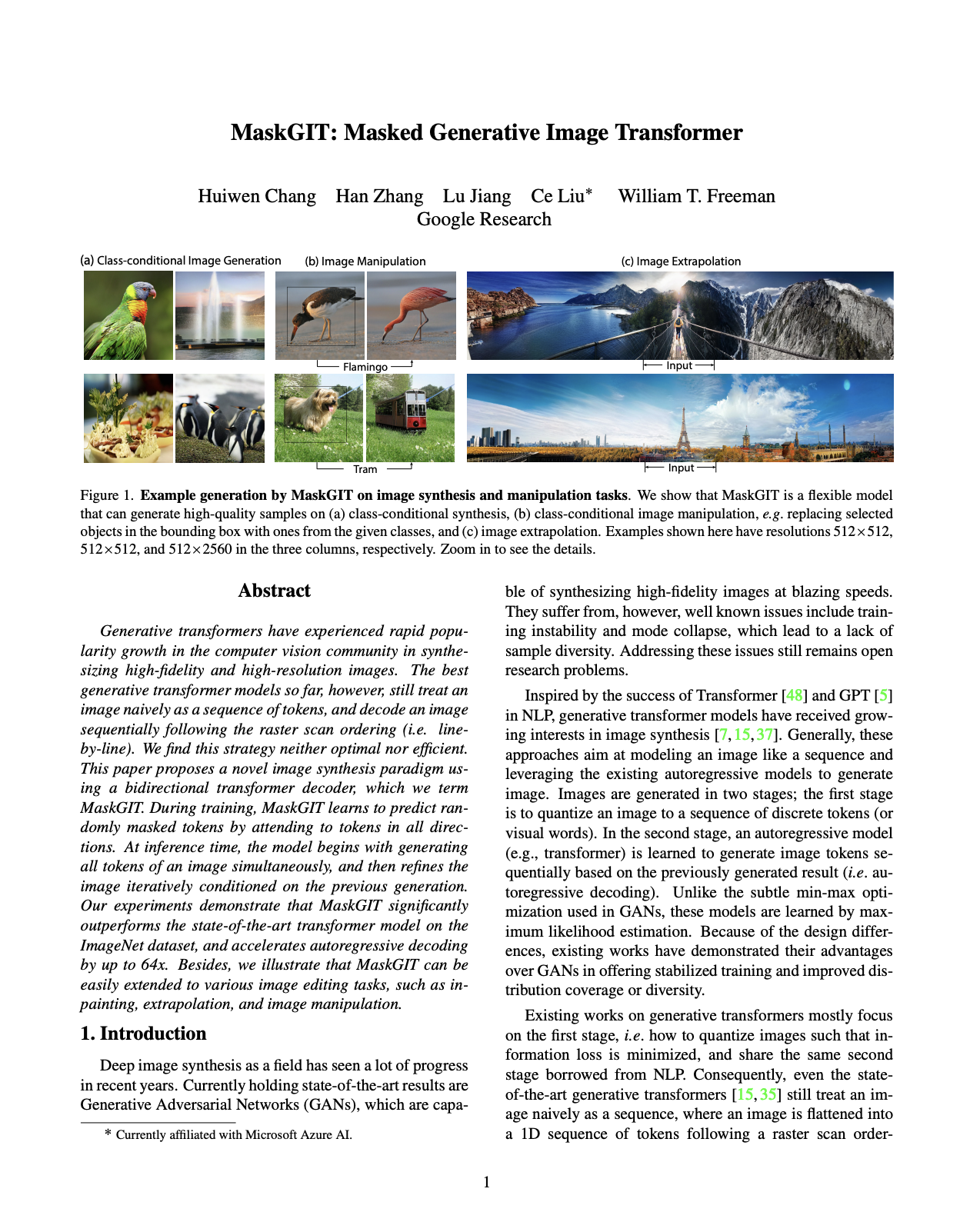

This paper proposes a novel image synthesis paradigm using a bidirectional transformer decoder, which we term MaskGIT. During training, MaskGIT learns to predict randomly masked tokens by attending to tokens in all directions. At inference time, the model begins by generating all tokens of an image simultaneously, and then iteratively refines the image, conditioning each step on the previous generation.
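The training objective and the iterative decoding loop can be sketched as follows. This is a simplified illustration, not the authors' implementation: `MASK`, `predict_all`, the uniformly drawn training mask ratio, and the cosine schedule for how many tokens remain masked after each step are all assumptions made here. At each step every masked position is predicted in parallel, the most confident predictions are kept, and the rest are re-masked for the next round.

```python
import math
import random

MASK = -1  # hypothetical sentinel id for a masked token

def mask_for_training(tokens, rng):
    """Randomly mask a subset of tokens; the model learns to recover them."""
    n = len(tokens)
    ratio = rng.random()  # assumption: mask ratio drawn uniformly from [0, 1)
    num_masked = max(1, math.ceil(ratio * n))
    masked_idx = set(rng.sample(range(n), num_masked))
    inputs = [MASK if i in masked_idx else t for i, t in enumerate(tokens)]
    return inputs, masked_idx  # the loss would be computed on masked_idx only

def iterative_decode(num_tokens, predict_all, num_steps=8, seed=0):
    """Start fully masked, predict all tokens in parallel, then refine."""
    rng = random.Random(seed)
    tokens = [MASK] * num_tokens
    steps = 0
    for step in range(num_steps):
        steps += 1
        preds = predict_all(tokens, rng)  # (token_id, confidence) per position
        still_masked = [i for i, t in enumerate(tokens) if t == MASK]
        proposal = list(tokens)
        for i in still_masked:            # fill every masked slot at once
            proposal[i] = preds[i][0]
        # Assumed cosine schedule: tokens that stay masked after this step.
        target = math.floor(math.cos(math.pi / 2 * (step + 1) / num_steps) * num_tokens)
        # Reveal at least one new token per step so decoding always progresses.
        num_to_remask = max(0, min(target, len(still_masked) - 1))
        # Re-mask the least confident new predictions; earlier tokens stay fixed.
        for i in sorted(still_masked, key=lambda i: preds[i][1])[:num_to_remask]:
            proposal[i] = MASK
        tokens = proposal
        if MASK not in tokens:
            break
    return tokens, steps

def dummy_predict_all(tokens, rng, vocab_size=1024):
    # Placeholder bidirectional model: random ids and random confidences.
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]

tokens, steps = iterative_decode(256, dummy_predict_all)
print(steps)  # 8
```

With this schedule a 256-token image is completed in 8 model calls rather than 256; the last step's target is zero, so every position is filled by the end.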

Our experiments demonstrate that MaskGIT significantly outperforms the state-of-the-art transformer model on the ImageNet dataset and speeds up autoregressive decoding by up to 64x. Moreover, MaskGIT can be easily extended to various image editing tasks, such as inpainting, extrapolation, and image manipulation.
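One way to see why editing tasks come almost for free is that the same refinement loop can start from a partially known image. The sketch below assumes a hypothetical `predict_all` model, a `MASK` sentinel id, and a cosine schedule over the initially masked positions. It masks a rectangular region of an existing token grid and fills only that region, keeping the surrounding tokens as conditioning. Masking the border instead of the interior would give an extrapolation variant under the same assumptions.

```python
import math
import random

MASK = -1  # hypothetical sentinel id for a masked token

def mask_region(grid, top, left, height, width):
    """Flatten a token grid, replacing a rectangular region with MASK."""
    out = []
    for r, row in enumerate(grid):
        for c, tok in enumerate(row):
            inside = top <= r < top + height and left <= c < left + width
            out.append(MASK if inside else tok)
    return out

def refine(tokens, predict_all, num_steps=8, seed=0):
    """Fill only MASK positions; known tokens are never changed."""
    rng = random.Random(seed)
    tokens = list(tokens)
    n_start = tokens.count(MASK)
    for step in range(num_steps):
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        if not masked:
            break
        preds = predict_all(tokens, rng)  # (token_id, confidence) per position
        # Assumed cosine schedule over the initially masked positions.
        target = math.floor(math.cos(math.pi / 2 * (step + 1) / num_steps) * n_start)
        keep_masked = max(0, min(target, len(masked) - 1))
        # Reveal the most confident predictions; the rest stay masked.
        by_conf = sorted(masked, key=lambda i: preds[i][1], reverse=True)
        for i in by_conf[:len(masked) - keep_masked]:
            tokens[i] = preds[i][0]
    return tokens

def dummy_predict_all(tokens, rng, vocab_size=1024):
    # Placeholder bidirectional model: random ids and random confidences.
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]

grid = [[(r * 16 + c) % 1024 for c in range(16)] for r in range(16)]
masked = mask_region(grid, top=4, left=4, height=8, width=8)
filled = refine(masked, dummy_predict_all)
```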